Article first published December 2010, updated March 2019

When timelines get short or budgets are tight, the process of testing email campaigns is often the first thing that is set aside.

We don’t mean the repetitive testing that goes into making a design look consistent across Outlook and Gmail. We mean testing that optimizes the results of your sends, such as maximizing your open rate with a proven subject line or selecting a solid call to action.

Read on to discover 4 simple tests that optimize your sends and make sure your campaign results are up to your standards.

Test #1: Picking an effective subject line

Subject lines are the most popular element of an email to test because testing them is very easy and designers may have trouble deciding which one to use. More importantly, the subject line plays a big part in determining whether the email gets opened or not. And, with more opens, you’re likely to get more clicks.

For example, in last month’s newsletter, we had two subject lines in mind:

In an A/B split campaign, you test two different subject lines by sending two versions of the same email—but each with a unique subject line—to a small portion of subscribers. As this small portion of your list engages with your A/B test, you can see which of the two subject lines receives a better reaction and garners the best results. Once the test is complete and you know which subject line received the most opens, the most popular version is then automatically sent to the majority of subscribers.
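The split described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how any particular email platform implements it: the function names, the 20% test fraction, the example addresses, and the open counts are all assumptions made up for the example.

```python
import random


def split_for_ab_test(subscribers, test_fraction=0.2, seed=0):
    """Shuffle the list, then carve out two equal test groups and a remainder.

    test_fraction is the hypothetical share of the list used for the test.
    """
    shuffled = list(subscribers)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    group_size = int(len(shuffled) * test_fraction) // 2
    group_a = shuffled[:group_size]
    group_b = shuffled[group_size:2 * group_size]
    remainder = shuffled[2 * group_size:]
    return group_a, group_b, remainder


def pick_winner(opens_a, sent_a, opens_b, sent_b):
    """Return 'A' or 'B' by open rate; a tie goes to A."""
    rate_a = opens_a / sent_a if sent_a else 0.0
    rate_b = opens_b / sent_b if sent_b else 0.0
    return "A" if rate_a >= rate_b else "B"


# Illustrative data only: 1,000 placeholder addresses and invented open counts.
subscribers = [f"user{i}@example.com" for i in range(1000)]
group_a, group_b, remainder = split_for_ab_test(subscribers)
winner = pick_winner(opens_a=31, sent_a=len(group_a),
                     opens_b=24, sent_b=len(group_b))
print(f"Send version {winner} to the remaining {len(remainder)} subscribers")
```

Shuffling before splitting keeps the two test groups comparable, so a difference in opens is more likely to come from the subject line than from who happened to land in each group.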

According to the estimate in our A/B testing reports, Version A resulted in an additional 1,640 opens, or a 6% increase over Version B.
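For readers who want to see how figures like these relate to each other, here is a minimal sketch of the arithmetic behind a relative lift and a projected number of extra opens. The counts below are hypothetical and chosen only to produce a round 6% lift. They are not the numbers from our newsletter, and the reports may calculate their estimate differently.

```python
def open_rate(opens, sent):
    """Share of delivered emails that were opened."""
    if sent <= 0:
        raise ValueError("sent must be positive")
    return opens / sent


def relative_lift(rate_a, rate_b):
    """How much better A performed than B, relative to B's rate."""
    if rate_b <= 0:
        raise ValueError("rate_b must be positive")
    return (rate_a - rate_b) / rate_b


def projected_extra_opens(rate_a, rate_b, recipients):
    """Extra opens expected if all recipients get A instead of B."""
    return (rate_a - rate_b) * recipients


# Hypothetical test results: 2,000 sends per version.
rate_a = open_rate(opens=530, sent=2000)
rate_b = open_rate(opens=500, sent=2000)

lift = relative_lift(rate_a, rate_b)
extra = projected_extra_opens(rate_a, rate_b, recipients=50_000)

print(f"Relative lift of A over B: {lift:.1%}")
print(f"Projected extra opens across the list: {extra:.0f}")
```

Note that the lift is relative to Version B's open rate, not a difference in percentage points, which is why a small gap between two open rates can still show up as a noticeable percentage increase.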

Most importantly, we can look at this alongside previous test results or use it as a starting point for future tests to determine what kinds of subject lines are most effective.

Test #2: A powerful call to action

Although a little more legwork than running an A/B split on a subject line, testing email content such as a call to action or button design is a great way to generate more clicks and maybe even learn a bit about what elements work best in your email newsletter.

The results can be compelling. As Mark puts it in his post, ‘I recommend (that) new email marketers test creative elements like adding call-to-action preheaders, call to action buttons and language, as these often provide big, immediate wins.’

Here is an example from earlier this year—an A/B split campaign that used two different calls to action (CTAs).

Both seemed like strong candidates, but the test results really set them apart:

The reports estimated that Version A received a 51% increase in clicks on the CTA. That’s the difference a line can make!

Test #3: Which image wins?

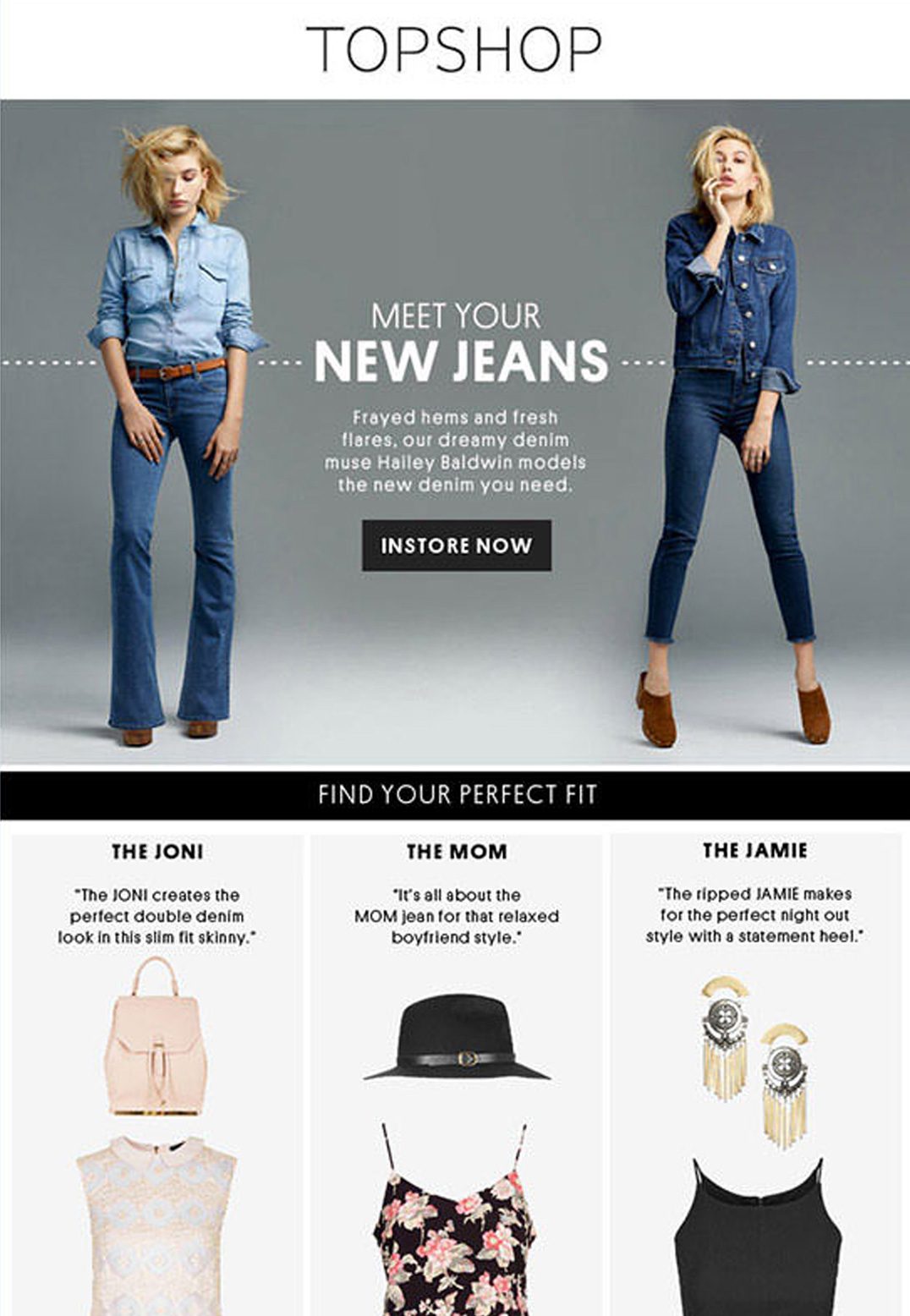

Another key suggestion from the post was to, “…test (product) images: these can produce a big lift and are easy to do.”

Like the A/B split campaign above, this also requires two versions of your email content, but as our friend Anna Yeaman at Style Campaign observed from her A/B tests on animated vs. non-animated email newsletters, the results can be dramatic.

In her example, she created two versions of the same email, but one included an animated gif, while the other displayed a static frame instead:

After running an A/B split on email content, the winner (Version B) was sent and consequently racked up an estimated 26% increase in click-throughs – all thanks to the small animation above.

Test #4: Engagement and conversion rates

Conversion rate optimization, commonly known as CRO, is the process of using systematic means to increase the number of readers who engage with your email. This engagement could mean clicking on a link to buy a product or simply agreeing to take a poll.

Whatever the case may be, there are many different ways to improve your conversion rate. Here are two of the most popular methods used by email marketers.

1. CRO tools

When it comes to modern email marketing, tools are quickly becoming indispensable. Because many of them use AI, they can take care of menial tasks that would otherwise be a waste of a marketer’s talents and time.

They can also achieve certain results that would not be practical for a human worker, such as communicating with other programs to compile data. This data could include open rates, click rates, and revenue per subscription, just to name a few of the possibilities.

When it comes to CRO, specifically, email tools are incredibly helpful.

AB Tasty, as an example, is one of the best tools out there for providing data insights, conversion rate optimization testing, nudge engagement and personalized experiences.

Another of the top conversion rate optimization tools is Adobe Analytics, which not only offers tons of data regarding emails but also puts an emphasis on attribution. What this means is that if a consumer clicks on a link in an email that takes them to your business’ Twitter page and then on to your website, this tool will track that behavior.

With less developed analytics tools, Twitter would get the credit for bringing in the traffic. Adobe Analytics goes back to find the original gateway, which in this case is the email.
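The difference between the two approaches can be illustrated with a tiny sketch. This is not Adobe Analytics’ API or its actual attribution model. It is a hypothetical example with made-up function names, showing only how crediting the last channel differs from crediting the first one.

```python
def last_touch(touchpoints):
    """Credit the final channel the visitor passed through before arriving."""
    return touchpoints[-1] if touchpoints else None


def first_touch(touchpoints):
    """Credit the channel that started the journey (the original gateway)."""
    return touchpoints[0] if touchpoints else None


# Hypothetical journey: the reader clicked a link in an email, landed on the
# business's Twitter page, and then went on to the website.
journey = ["email", "twitter"]

print("Last-touch credit goes to:", last_touch(journey))
print("First-touch credit goes to:", first_touch(journey))
```

With last-touch credit, the email that started the visit never appears in the report, which is how an email campaign can look less effective than it really was.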

2. CRO services

If you’re not sure whether you want to use tools but still want to kickstart your CRO, you may consider conversion rate optimization services.

A CRO service is provided by companies that specialize in digital marketing. With these services, you get not only a tool but an entire team of hard-working people who can either train you or take your email campaign into their hands.

Through a CRO service, you’ll also receive many of the same benefits that you would get from a tool. For instance, CRO services usually come with an analytics service of their own, as well as email customer support and automated emails.

Some CRO services even offer drag-and-drop building tools, which means that you won’t have to go through the hassle of learning HTML or struggle with the interface of your email provider. With a drag-and-drop email builder, all you need is a vision, and the rest comes easily.

In addition to this, some of the higher-end CRO services come with advanced timing optimization, advanced link tracking, and spam testing. Spam testing is a deceptively important part of email marketing because it’s actually quite easy for your emails to end up in spam folders.

Source: Campaign Monitor

Wrap up

So, we’ve featured some fairly simple examples here, but as the folks at Performable point out, A/B testing doesn’t necessarily have to be about small changes—you can also use A/B split tests to determine which email layouts work best, how the tone of copy affects response and more.

By testing two very different email designs, you can, for instance, determine whether a redesigned email newsletter is on the right track as a whole. Of course, this is quite different from determining which single CTA works best!

Also, it’s a good idea to not look at results from your tests in a silo, but set controls, look for trends and take into account the bigger picture. For example, variables such as the time of day or time of year can affect campaign results—especially now, during the holiday period—so learnings from June’s campaigns may not be as applicable when sending in December.

Finally, as we’ve seen above, testing doesn’t have to be a time-consuming process and ultimately, should be seen as an ongoing, recordable feature of your campaigns. Even if you’re simply making small changes to your subject lines, at least you’re making improvements—and learning a little something more each time you send.

These are all parts of optimizing your email for the results you need. Always remember to:

- Craft an effective subject line.

- Utilize an attention-grabbing call-to-action.

- Choose an image that testing supports as the better choice.

- Use conversion tools and services to improve engagement.

If you found this post educational, you may like our post about how email marketing differs from other types of digital marketing.